Can you trust ChatGPT to keep your data secure?

News from OpenAI that a bug allowed some users to see titles from another user’s chat history raises the question of whether this constitutes a data breach and if you can trust ChatGPT to keep your data private.

This incident highlights the risk of a data breach, in which unauthorised access could lead to privacy compromises, identity theft, and the misuse of personal information.

The answer is no, and ChatGPT has helpfully explained why this is the case. As in my previous posts about why it is important to verify your GPT results, I asked my AI colleague Richard Davies to verify the answer generated.

Content:

How ChatGPT uses AI training to improve its responses

Why does ChatGPT security matter to protect user data?

ChatGPT explains why it is best to avoid sharing sensitive data

A trust relationship may not exist with ChatGPT

ChatGPT’s data handling practices

Does ChatGPT save user data, and if so, why

Musk and scientists call for halt to AI race

How to use GPT-4 AI securely with strict access controls

How ChatGPT uses AI training to improve its responses

ChatGPT’s models are refined over time using data that includes user interactions, so the conversations you have may be used to improve later versions. The more interaction data is available for training, the more new patterns and trends the model can pick up, and the more accurate its responses can become.

This training process is important in improving ChatGPT’s ability to understand nuances of language, idioms, and context-specific expressions.

Why does ChatGPT security matter to protect user data?

To understand why the ChatGPT bug and data security matter, it is helpful to consider the wider consequences of the bug.

A deeper investigation by OpenAI revealed that the bug may also have exposed payment-related information belonging to 1.2% of the ChatGPT Plus subscribers who were active during a specific nine-hour window.

This exposure could have allowed unauthorised individuals to access sensitive user data, which highlights the importance of strict access controls and other security measures.

To prevent such incidents, OpenAI says it relies on security measures including encryption, strict access controls, external security audits, and incident response plans.

If you have been one of the millions of unfortunate victims of identity theft, you will know how worrying it is to have your payment details compromised. OpenAI has patched the bug, and at the time of writing there is no mention of any theft of sensitive data or fraud resulting from it.


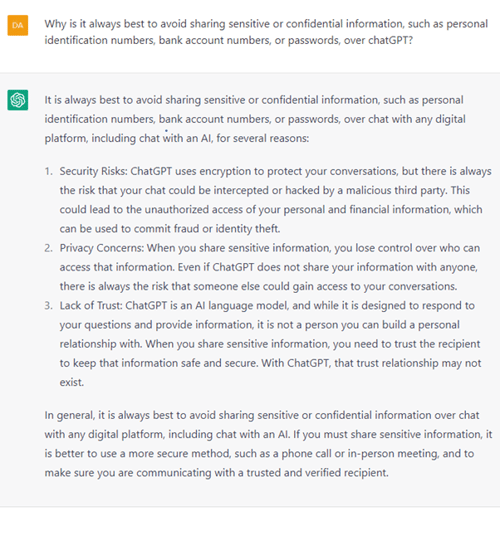

ChatGPT explains why it is best to avoid sharing sensitive data

ChatGPT had told me that it was always best to avoid sharing sensitive or confidential information, such as personal identification numbers, bank account numbers, or passwords, over ChatGPT. I asked the bot why this was, and it generated the following answer:

It is always best to avoid sharing sensitive or confidential information, such as personal identification numbers, bank account numbers, or passwords, over chat with any digital platform, including chat with an AI, for several reasons:

- Security Risks: ChatGPT uses encryption to protect your conversations, but there is always the risk that your chat could be intercepted or hacked by a malicious third party. This could lead to the unauthorized access of your personal and financial information, which can be used to commit fraud or identity theft.

- Privacy Concerns: When you share sensitive information, you lose control over who can access that information. Even if ChatGPT does not share your information with anyone, there is always the risk that someone else could gain access to your conversations.

- Lack of Trust: ChatGPT is an AI language model, and while it is designed to respond to your questions and provide information, it is not a person you can build a personal relationship with. When you share sensitive information, you need to trust the recipient to keep that information safe and secure. With ChatGPT, that trust relationship may not exist.

In general, it is always best to avoid sharing sensitive or confidential information over chat with any digital platform, including chat with an AI.

If you must share sensitive information, it is better to use a more secure method, such as a phone call or in-person meeting, and to make sure you are communicating with a trusted and verified recipient.

A trust relationship may not exist with ChatGPT

ChatGPT helpfully points out that a trust relationship may not exist, which makes sense because you are communicating with an AI language model that is designed to respond in a conversational manner.

- There is also a risk that confidential or sensitive business information entered into ChatGPT could be accessed without authorisation, leaking proprietary information and trade secrets.

My colleague Richard points out, “ChatGPT may sound human-like, but it does not have the capacity for personal relationships, trust, or emotions,” adding, “I have predicted that it is only a matter of time before someone falls in love with a ChatBot, because they feel it understands them better than a human.”

ChatGPT’s data handling practices

As a user, it’s essential to understand how ChatGPT handles your data.

- Does it save your conversations?

- Does it share your data with third parties?

Let’s dive into the details.

Does ChatGPT save user data, and if so, why

Yes, ChatGPT does save user data, but only for specific reasons. When you chat with ChatGPT, your conversations are saved on OpenAI’s servers to improve the AI model. This data is used to train the model, find patterns, and generate more accurate responses. ChatGPT does not, however, sell or share user data with third parties without explicit consent.

ChatGPT’s data handling is designed to protect user data and keep it confidential. OpenAI states that it encrypts data in transit and at rest, and applies strict access controls to prevent unauthorised access to user data. This is not end-to-end encryption, because OpenAI’s servers must be able to read your conversations in order to generate responses. ChatGPT also complies with regional data protection regulations such as GDPR in the EU and CCPA in California.

But ChatGPT is not immune to security issues and vulnerabilities. Like any online service, it carries a risk of data breaches, unauthorised access, and misuse of sensitive information. To mitigate these risks, ChatGPT has implemented security measures such as encryption, access controls, and regular security audits.

ChatGPT is a powerful AI chatbot that uses AI training to improve its responses and aims to provide a secure and confidential space for user interactions. It does save user data, so you need to understand the purpose and limitations of that data collection. Once you know how ChatGPT handles your data, you can decide what is safe to share and take steps to protect your sensitive information.

Musk and scientists call for halt to AI race

Romantic liaisons with a ChatBot may be a little way off, but concerns that the race to roll out AI is out of control have caused Elon Musk and others to call for a six-month pause. In a letter to the tech giants, the scientists warn that AI systems with “human-competitive intelligence can pose profound risks to society and humanity”.

- Additionally, the challenge of complying with the General Data Protection Regulation (GDPR) in the context of AI development adds another layer of complexity for organisations.

A big concern is that AI could flood the internet with disinformation, with catastrophic consequences that until now belonged to the realms of science fiction.

How to use GPT-4 AI securely with strict access controls

As ChatGPT explains, ‘If you must share sensitive information or chat over a digital platform, it is better to use a more secure method and make sure you communicate with a human who is trusted and verified’.

Generative AI like GPT-4 is a revolutionary technology that is already giving companies a competitive edge because it can improve processes. This can be done safely when AI is trained on data sets that are trusted and controls are in place to protect your data. It is crucial to use the data controls available within the ChatGPT platform to manage how your data is used.

- Understanding and staying informed about the company's privacy and data retention policies is essential when using AI systems like ChatGPT. This includes reviewing privacy policies, using anonymous accounts, and monitoring data retention policies to protect personal information.

Protecting user data is paramount, and it involves ensuring that the collection, storage, and handling of user data are secure. This includes encryption, security measures, and compliance with data protection regulations to safeguard user privacy.
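One practical way to follow this advice is to strip obvious secrets from a prompt before it leaves your machine. The Python sketch below is an illustration only: the `redact` and `restore` helpers and the two patterns are hypothetical names and rules chosen for this example, not part of ChatGPT or any OpenAI tool, and real-world use would need far broader, tested rules.

```python
import re

# Illustrative patterns only (an assumption for this sketch, not a complete rule set).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text):
    """Replace matches with numbered placeholders.

    Returns the redacted text and a mapping from placeholder to original value.
    """
    mapping = {}
    for label, pattern in PATTERNS.items():
        def substitute(match, label=label):
            placeholder = f"[{label}_{len(mapping) + 1}]"
            mapping[placeholder] = match.group(0)
            return placeholder
        text = pattern.sub(substitute, text)
    return text, mapping


def restore(text, mapping):
    """Put the original values back into text that contains placeholders."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text


prompt = "Card 4111 1111 1111 1111, contact jane.doe@example.com"
safe_prompt, lookup = redact(prompt)
print(safe_prompt)                   # only this version would be sent to the chatbot
print(restore(safe_prompt, lookup))  # the originals never leave your machine
```

The lookup table stays local, so placeholders in the chatbot's reply can be swapped back afterwards. Pattern matching of this kind only catches data in predictable formats, so it supplements rather than replaces the advice not to share sensitive information in the first place.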

OpenAI uses Kenyan workers to verify results

According to Time magazine, OpenAI has employed Kenyan workers for less than $2 per hour to ensure ChatGPT does not create toxic answers. OpenAI explains that human moderators can see responses generated by ChatGPT during their review process.

The moderators were tasked with reviewing and labelling vast amounts of text data to identify and classify harmful content, such as hate speech, violence, and sexual abuse.

How to verify GPT results with a certified expert

It is now possible to deploy new GPT technology safely and verify results using a certified human expert. An example of this is the new GAI AI-Translation product developed in partnership with Sheffield Hallam University.

- The translation results generated by GAI are authentic because the machine learning model is trained on a vast private data lake that is trusted and verified. Therefore, it does not create the errors and bias that result from models trained on data sets scraped from the internet.

Global companies that use human and AI generated content want the assurance of a trusted linguist to guarantee tone of voice is perfect. Certified linguists who have been tested and verified are selected to perform this task.

Due to new advances like the Expert-in-the-Loop feature in GAI, it is now possible for companies to seamlessly commission their professional linguists online.

The AI race may pause but it has not stopped

Big tech companies may well heed the call from Elon Musk to pause the race to roll out AI, but behind the scenes the race is well underway. One of the key ethical considerations in this race is whether to share user data, which raises significant privacy concerns and calls for strict confidentiality measures.